The Space Between Excitement and Concern: Finding My Seat at the AI Table

By Lindsey Jarrett, PhD

Vice President of Ethical AI

Over the last 18 months I have given 29 talks, compared to only 7 in the 18 months before that. This may seem like a basic observation that could be explained by many different factors, all of which may be correct. But from where I sit, I see it as an explosion of interest in the topic of Ethical AI.

Early Excitement

When the Center for Practical Bioethics first started this work in 2019, we had a large sponsor that helped put the initiative in front of numerous healthcare IT leaders. Big companies like GE, Microsoft and IBM were also talking about what responsible technology should look like, and they even started using terminology that paired well with ethical principles, even if the world wasn’t quite ready to apply those principles to healthcare. Technology companies across the globe were starting to have serious conversations about data privacy, patient data security, bias in data, and algorithm monitoring, and they were getting very close to writing new policy.

Then, long story short, the pandemic rocked our healthcare systems across the globe, which essentially moved everything not directly affected by COVID-19 to the back burner. However, during that same period, especially 2020-2022, we saw a surge in diversity, equity, inclusion and justice (DEIJ)-related initiatives that could largely be traced to a fateful event in Minneapolis, Minnesota, that had far-reaching consequences for American policing. I believe the events of 2020 shook how people understood the strength of our social systems and accelerated our need to unpack the layers of their infrastructure.

Harm or Good?

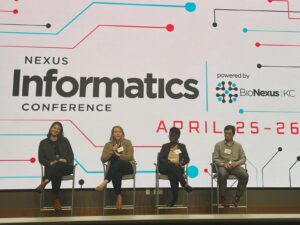

Now enter 2024, where DEIJ initiatives are fighting for protection and many people are excited about AI, especially after nearly two years of ChatGPT use across the globe. Given this, you might wonder: who would want the Center for Practical Bioethics to come and talk about Ethical AI? It’s a question I ask myself almost daily, especially because my position often puts me in rooms where I sit in the middle, between those who want to convince me that AI is now built for good and those who are terrified that it will do far more harm than good.

My seat in the middle is a complicated one, because I do believe that technology, including AI-enabled technology, can create some really great change, especially for people who need technology in important areas of their lives. I also believe, though, that technology is often built to solve the wrong problem and often perpetuates faulty human decisions. These beliefs have created a dilemma that has kept me in that middle seat over the years as I navigate community partners across healthcare and the growing sphere of healthcare technology. This dilemma has created a space between excitement and concern, and what I’ve seen across the audiences of my talks over the last 18 months suggests that I’m not operating in this space alone.

Back to the Future

The audiences that appear on my monitor during virtual talks and the ones that sit in front of me at in-person events hold a variety of perspectives on this topic. Despite their differences in AI knowledge, most of the people I have met through my talks have mixed feelings about AI. Over the last 18 months I have given talks to caregivers, students, healthcare providers, IT professionals, healthcare administrators, risk managers, payors, librarians, investors and researchers. These folks represent private companies, public organizations, large academic medical centers, start-ups, small medical clinics, advocacy groups, federal institutions, universities, rehabilitation clinics, large tech firms, and small provider groups.

Yet, when I conclude my 45-minute presentation or my time at the mic during a panel, half the room is still excited about what AI can bring but unsure of its consequences, and half the room is concerned about AI but feels at risk if they don’t use it. This reminds me that the audiences of 2019 and 2024, despite differing in size and AI experience, have changed very little. Maybe some have switched seats from concern to excitement, but I would argue that move isn’t permanent. As a scientist, I wonder if this was a missed opportunity for a study; as a program leader, I wonder if we are all still sitting in 2019, and whether the events between then and now simply have not been enough to move us decisively one way or the other, the kind of move that could create lasting change. Knowing this, I may very well ask my next audience, “Are you sitting in the same spot you were in 2019 when it comes to Ethical AI?”